Broadly speaking, you can divide the history of computers into four periods: the mainframe, the mini, the microprocessor, and the modern post-microprocessor. The mainframe era was characterized by computers that required large buildings and teams of technicians and operators to keep them going. More often than not, both academics and students had little direct contact with the mainframe: you handed a deck of punched cards to an operator and waited for the output to appear hours later. During the mainframe era, academics concentrated on languages and compilers, algorithms, and operating systems.

The minicomputer era put computers in the hands of students and academics, because university departments could now buy their own minis. Because minicomputers were not as complex as mainframes and students could get direct hands-on experience, many departments of computer science and electronic engineering taught students how to program in the native language of the computer: assembly language. In those days, the mid-1970s, assembly language programming was used to teach both the control of I/O devices and the writing of programs (i.e., assembly language was taught rather like high-level languages). The explosion of computer software had not taken place, and if you wanted software you had to write it yourself.

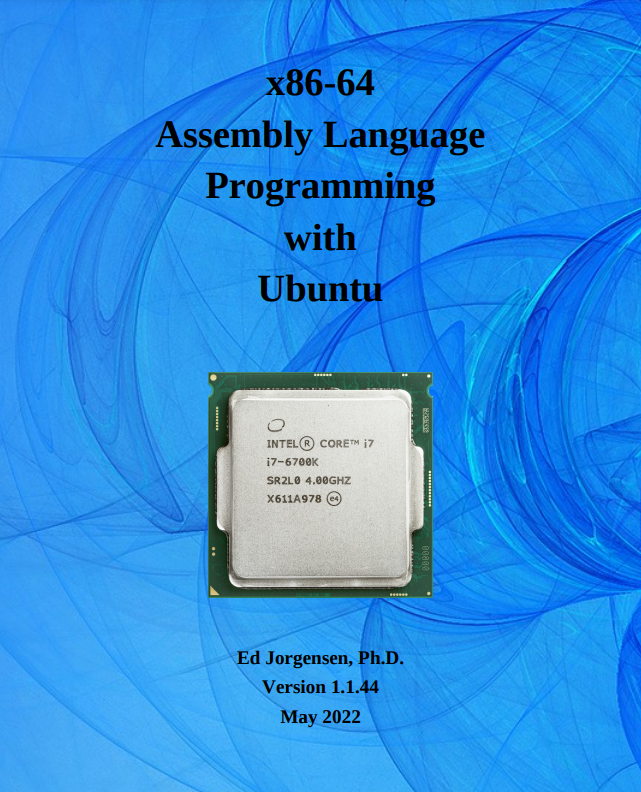

The late 1970s saw the introduction of the microprocessor. For the first time, each student was able to access a real computer. Unfortunately, microprocessors appeared before the introduction of low-cost memory (both primary and secondary). Students had to program microprocessors in assembly language because the only storage mechanism was often a ROM with just enough capacity to hold a simple single-pass assembler.

The advent of the low-cost microprocessor system (usually on a single board) ensured that virtually every student took a course on assembly language. Even today, most courses in computer science include a module on computer architecture and organization, and teaching students to write programs in assembly language forces them to understand the computer’s architecture. However, some computer scientists who had been educated during the mainframe era were unhappy with the microprocessor, because they felt that the 8-bit microprocessor was a retrograde step: its architecture was far more primitive than that of the mainframes they had studied in the 1960s.

The 1990s is the post-microprocessor era. Today’s personal computers have more power and storage capacity than many of yesterday’s mainframes, and they have a range of powerful software tools that were undreamed of in the 1970s. Moreover, the computer science curriculum of the 1990s has exploded. In 1970 a student could be expected to be familiar with all fields of computer science. Today, a student can be expected only to browse through the highlights.

The availability of high-performance hardware and the drive to include more and more new material in the curriculum have put pressure on academics to justify what they teach. In particular, many are questioning the need for courses on assembly language.

If you regard computer science as being primarily concerned with the use of the computer, you can argue that assembly language is an irrelevance. Does the surgeon study metallurgy in order to understand how a scalpel operates? Does the pilot study thermodynamics to understand how a jet engine operates? Does the news reader study electronics to understand how the camera operates? The answer to all these questions is “no”. So why should we inflict assembly language and computer architecture on the student?

First, education is not the same as training. The student of computer science is not simply being trained to use a number of computer packages. A university course leading to a degree should also cover the history and the theoretical basis for the subject. Without a knowledge of computer architecture, the computer scientist cannot understand how computers have developed and what they are capable of.